In Memory of Daniel Bristot de Oliveira

This post falls into the category of things I never thought I would write, nor wanted to: we lost Daniel this week, probably due to heart failure.

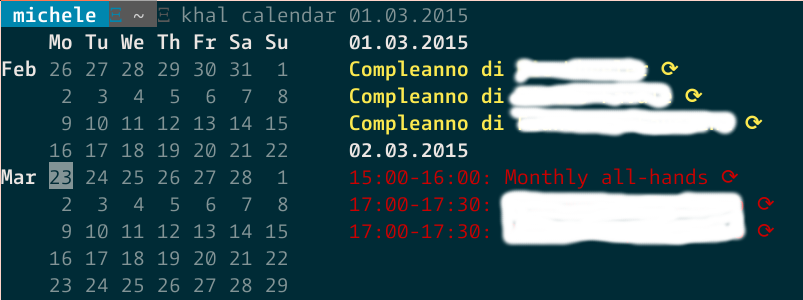

I met Daniel in Red Hat's internal IRC #italy channel. It must have been around 2014-2015. We were both in support engineering at the time and talked about kernel issues, but on the side we also started chatting about music, sports, life and everything else.

At the time he was planning to move from Florianopolis to Italy to do his PhD. He was also quite keen to learn about the places his grandparents were from, namely the area around Belluno.

Once he moved to Italy, we helped him out with a few bureaucratic hiccups he was stuck in, and we slapped his name on our doorbell since he needed an initial address to get started.

I still remember the first time he came to visit me here in South Tyrol, because he was quite mesmerized by the climate, the mountains and the sauna experience ("just like being in Brazil in a car with no aircon and naked", he would say). We went sledding and he decided to sled down the mountain with a Brazilian flag attached to his backpack. It drew quite a few stares that day and it made me laugh a lot.

We went to watch his first ice hockey game.

And we took the kids to Innsbruck for a day trip.

Initially we always spoke or chatted in English, but within a few months he had learned enough Italian to converse, and we switched to that. His mixture of Portuguese inflection and a Tuscan accent was always a good source of banter. He introduced me to cooking picanha and to rock gaúcho. Engenheiros do Hawaii became a mainstay in my car, which is something he was quite proud of.

We also used to meet at the Christmas parties at the Milan office, where we always had loads of fun and probably way too much booze.

We enjoyed chatting about all the big life questions, quoting terrible old Italian B movies and sending stupid memes to each other. The last time he dropped by, we joked about the fact that my older kid had become taller than him.

We had the usual BBQ with some picanha.

And then, with Bianca, we went to a mountain hut.

Daniel, you had plans to visit Japan this summer, to marry Bianca and to have kids. All this is now shattered and I am at a loss for words. You called me your "older Italian brother" and I was secretly very proud of that title.

You will be sorely missed. Rest in peace.