TripleO on NUCs

Being the happy owner of a couple of Intel DC53427HYE NUCs, I decided to install TripleO on them.

My setup consists of the following:

- Undercloud (nuc1.int.rhx) with two interfaces: the internal eno1, connected to my home LAN (172.16.11.0/20), and an additional USB-Ethernet dongle, enp0s29u1u5u1, connected to a separate segment (192.0.2.0/24) that the other two NUCs are attached to

- Two NUCs whose AMT IP addresses have been configured as 192.0.2.100 (nuc2) and 192.0.2.101 (nuc3)

I won't delve too much into the details of TripleO; see the official docs at http://tripleo.org/ if you need more information. Here are the steps I took after installing CentOS 7.2 and applying this patch: http://acksyn.org/files/tripleo/nuc.patch.

Steps

    yum install -y vim tmux wsmancli
    cat > /etc/hosts <<EOF
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 nuc1 nuc1.int.rhx
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    EOF
    useradd stack
    echo "foobar" | passwd --stdin stack
    echo "stack ALL=(root) NOPASSWD:ALL" | sudo tee -a /etc/sudoers.d/stack
    sudo chmod 0440 /etc/sudoers.d/stack
    su - stack
    sudo yum -y install epel-release yum-plugin-priorities
    sudo curl -o /etc/yum.repos.d/delorean.repo http://trunk.rdoproject.org/centos7/current-tripleo/delorean.repo
    sudo curl -o /etc/yum.repos.d/delorean-current.repo http://trunk.rdoproject.org/centos7/current/delorean.repo
    sudo sed -i 's/\[delorean\]/\[delorean-current\]/' /etc/yum.repos.d/delorean-current.repo
    sudo /bin/bash -c "cat <<EOF>>/etc/yum.repos.d/delorean-current.repo
    includepkgs=diskimage-builder,openstack-heat,instack,instack-undercloud,openstack-ironic,openstack-ironic-inspector,os-cloud-config,os-net-config,python-ironic-inspector-client,python-tripleoclient,tripleo-common,openstack-tripleo-heat-templates,openstack-tripleo-image-elements,openstack-tuskar-ui-extras,openstack-puppet-modules
    EOF"
    sudo curl -o /etc/yum.repos.d/delorean-deps.repo http://trunk.rdoproject.org/centos7/delorean-deps.repo
    sudo yum install -y python-tripleoclient
    cat > ~/undercloud.conf <<EOF
    [DEFAULT]
    local_interface = enp0s29u1u5u1
    [auth]
    EOF
    openstack undercloud install 2>&1 | tee undercloud_install.log
    # build images
    export NODE_DIST=centos7
    export USE_DELOREAN_TRUNK=1
    export DELOREAN_TRUNK_REPO="http://trunk.rdoproject.org/centos7/current-tripleo/"
    export DELOREAN_REPO_FILE="delorean.repo"
    time openstack overcloud image build --all 2>&1 | tee build_images.log
    openstack overcloud image upload
    cat > instackenv.json << EOF
    {
      "nodes":[
        {
          "_comment":"nuc2",
          "pm_type":"pxe_amt",
          "mac": [
            "ec:a8:6b:fa:65:c7"
          ],
          "cpu": "4",
          "memory": "7500",
          "disk": "100",
          "arch": "x86_64",
          "pm_user":"admin",
          "pm_password":"foobar",
          "pm_addr":"192.0.2.100"
        },
        {
          "_comment":"nuc3",
          "pm_type":"pxe_amt",
          "mac": [
            "b8:ae:ed:71:3e:20"
          ],
          "cpu": "4",
          "memory": "7500",
          "disk": "100",
          "arch": "x86_64",
          "pm_user":"admin",
          "pm_password":"foobar",
          "pm_addr":"192.0.2.101"
        }
      ]
    }
    EOF
    json_verify < instackenv.json
    openstack baremetal import --json instackenv.json
    openstack baremetal configure boot
    openstack baremetal introspection bulk start
    openstack overcloud deploy --templates
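The json_verify step above relies on a tool from the yajl package, which may not be installed on every undercloud. As a hedged alternative, here is a minimal sketch of a hypothetical helper, validate_json (my own name, not part of TripleO), that falls back to the json.tool module of whichever Python interpreter is on the path. It only checks that the file parses as JSON, not that the node fields make sense.

```shell
# Hypothetical helper: succeed only if the given file parses as JSON.
validate_json() {
    local file=$1 py
    # Prefer python3, fall back to python (CentOS 7 ships Python 2 as "python").
    py=$(command -v python3 || command -v python) || return 2
    "$py" -m json.tool "$file" > /dev/null 2>&1
}
```

It could be used as a guard before the import, for example `validate_json instackenv.json && openstack baremetal import --json instackenv.json`.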

Expected Outcome

If everything goes according to plan, you should see this message at the end of the deployment:

    2016-03-03 16:34:32 [overcloud-BlockStorageNodesPostDeployment-zdtbamwswrr2]: CREATE_COMPLETE Stack CREATE completed successfully
    Stack overcloud CREATE_COMPLETE
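If you want to check for that final line from a script, a small grep wrapper is enough. This is only a sketch: it assumes the output of the deploy command was captured to a file, which the steps above do not do, and both the helper name deploy_succeeded and the file name overcloud_deploy.log are hypothetical.

```shell
# Hypothetical helper: succeed if the captured deploy log reports success.
deploy_succeeded() {
    local log=$1
    grep -q 'Stack overcloud CREATE_COMPLETE' "$log"
}
```

For instance, after running `openstack overcloud deploy --templates 2>&1 | tee overcloud_deploy.log`, calling `deploy_succeeded overcloud_deploy.log` returns 0 only if the message is present.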

Caveats

-

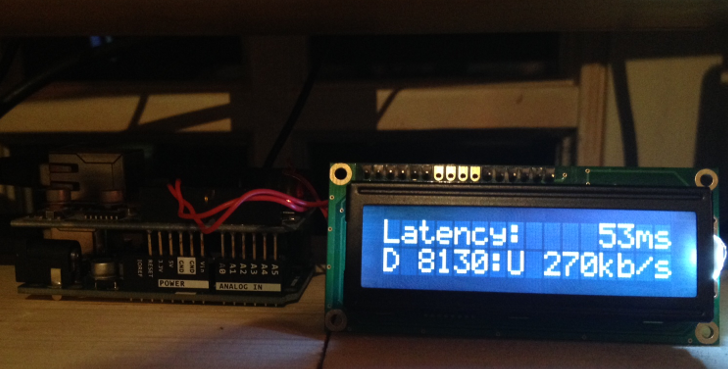

Sleep modes. Because I turn off the NUCs via a power switch and because it puts the AMT processor in sleep mode and it needs an ICMP packet to wake up - which can take up to 25 seconds - I just went ahead and disabled the AMT Power Saving Policy by choosing: "Mobile: ON in S0". If you do not do this you might have to tweak the max_attempts and the action_wait parameters under amt in /etc/ironic/ironic.conf on the undercloud.

-

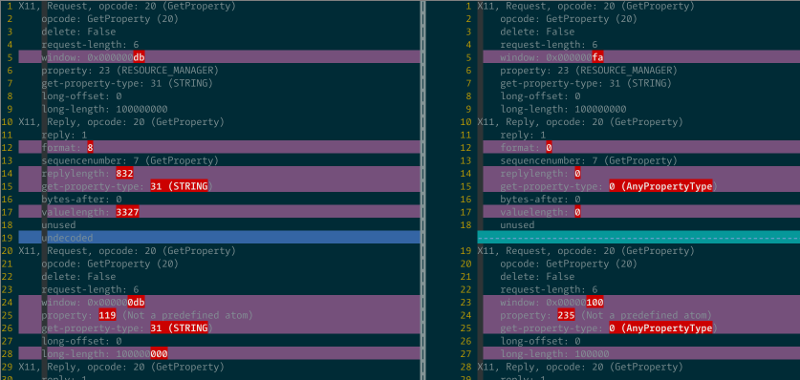

If most ironic operations fail and you see the following error in the logs:

2016-03-02 22:06:28.433 23958 WARNING ironic.conductor.manager [-] During sync_power_state, could not get power state for node 8afb31f9-6cef-4629-8a2a-5822a968b15d, attempt 1 of 3. Error: Wrong number or type of arguments for overloaded function #'new_Client'. Possible C/C++ prototypes are: _WsManClient::_WsManClient(char const ) _WsManClient::_WsManClient(char const ,int const,char const ,char const ,char const ,char const )

This happens because the protocol string (in this case 'http') passed from ironic to the driver is actually the unicode string u"http", which confuses the openwsman SWIG bindings. This is something I need to look at in more detail. As a quick fix, I hardcoded the proper string in parse_driver_info in ironic/drivers/modules/amt/common.py.

- AMT seems to be flaky at times. While most of my deployments worked, a couple of times the AMT would just go on strike and some of the deployment steps would fail due to timeouts. I will also check whether a firmware update fixes this. I haven't hit https://bugs.launchpad.net/ironic/+bug/1454492 yet, but it seems that it can easily happen. Apparently a new python-only AMT driver is in the works.
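For the sleep-mode caveat above, if disabling the power saving policy is not an option, one could try waking the AMT processor with pings before starting any ironic operation. This is only a sketch and I have not verified it against real AMT hardware: wait_for_amt is a hypothetical helper, and the default of 30 attempts is an assumption chosen to comfortably cover the roughly 25 seconds mentioned above.

```shell
# Hypothetical helper: ping an AMT address until it answers or attempts run out.
# Usage: wait_for_amt ADDRESS [MAX_ATTEMPTS]
wait_for_amt() {
    local addr=$1 max_attempts=${2:-30} attempt=1
    while [ "$attempt" -le "$max_attempts" ]; do
        # One ICMP echo request, waiting at most one second for the reply.
        if ping -c 1 -W 1 "$addr" > /dev/null 2>&1; then
            return 0
        fi
        # Pause so the loop does not spin if ping fails immediately.
        sleep 1
        attempt=$((attempt + 1))
    done
    return 1
}
```

With the addresses from this setup, that would be `wait_for_amt 192.0.2.100 && wait_for_amt 192.0.2.101` before `openstack baremetal introspection bulk start`.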

Future

Over the next few days, or rather evenings, I will clean up the (trivial) patches so this all works out of the box. The changes are quite minimal, but I need to kill the wsmancli dependency and test things a bit more. I also need to retest everything with openwsman 2.4 as shipped by CentOS, because I used a locally installed 2.6.2 version while chasing the unicode parameter bug mentioned above. I hope this saves some time for anyone trying the same ;)